This page gives a short overview of the available systems from a hardware point of view. All hardware can be reached through a login node via SSH: deep@fz-juelich.de. The login node is implemented as a virtual machine hosted by the master nodes (in failover mode). See also the information about getting an account and using the batch system.

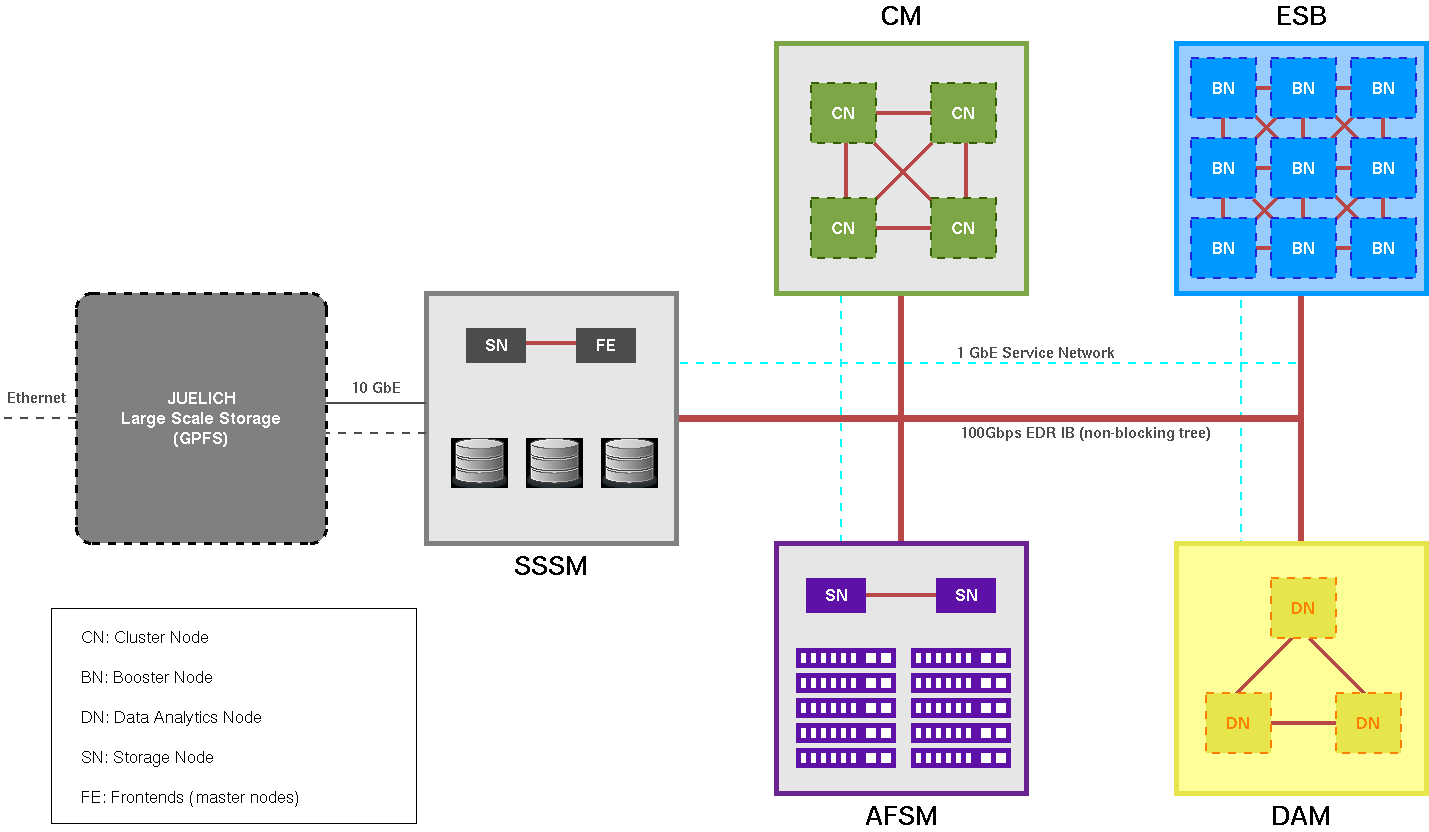

The DEEP-EST system is a prototype of the Modular Supercomputing Architecture (MSA) consisting of the following modules: the Cluster Module (CM), the Extreme Scale Booster (ESB) and the Data Analytics Module (DAM).

In addition to the three compute modules, a Scalable Storage Service Module (SSSM) provides shared storage infrastructure for the DEEP-EST prototype (/usr/local). It is accompanied by the All Flash Storage Module (AFSM), which provides a fast work filesystem (/afsm) on the compute nodes.

All modules are connected via a 100 Gb/s EDR InfiniBand (IB) network in a non-blocking tree topology. In addition, the system is connected to the Jülich storage system (JUST) to share home and project file systems with other HPC systems hosted at Jülich Supercomputing Centre (JSC).

The Cluster Module (CM) is composed of 50 nodes with the following hardware specifications:


The Extreme Scale Booster (ESB) is composed of 75 nodes with the following hardware specifications:


The Data Analytics Module (DAM) is composed of 16 nodes with the following hardware specifications:


The SSSM is based on spinning disks. It is composed of 4 volume data server systems, 2 metadata servers and 2 RAID enclosures. The RAID enclosures each host 24 spinning disks with a capacity of 8 TB each. Both RAIDs expose two 16 Gb/s Fibre Channel connections, each connecting to one of the four file servers. There are 2 volumes per RAID, each run in a RAID-6 configuration. The BeeGFS global parallel file system is used to make 292 TB of data storage capacity available.
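The disk counts given for the spinning-disk storage module can be turned into a rough capacity estimate. The sketch below is only a plausibility check, not a description of the actual setup: it assumes that each enclosure splits its 24 disks evenly over its 2 volumes and that every volume is a single RAID-6 group without hot spares. Neither assumption is stated in the text, and all names in the code are illustrative.

```python
# Rough capacity estimate from the figures quoted in the text.
# Assumption (not stated in the text): each RAID enclosure splits its
# 24 disks evenly over its 2 volumes, and every volume is one RAID-6
# group that gives up 2 disks' worth of space to parity.

ENCLOSURES = 2
DISKS_PER_ENCLOSURE = 24
DISK_TB = 8                  # decimal terabytes per disk
VOLUMES_PER_ENCLOSURE = 2
RAID6_PARITY_DISKS = 2       # RAID-6 stores two parity blocks per stripe


def sssm_capacity():
    """Return (raw TB, usable TB, usable TiB) under the assumptions above."""
    disks_per_volume = DISKS_PER_ENCLOSURE // VOLUMES_PER_ENCLOSURE
    data_disks_per_volume = disks_per_volume - RAID6_PARITY_DISKS
    volumes = ENCLOSURES * VOLUMES_PER_ENCLOSURE
    raw_tb = ENCLOSURES * DISKS_PER_ENCLOSURE * DISK_TB
    usable_tb = volumes * data_disks_per_volume * DISK_TB
    usable_tib = usable_tb * 10**12 / 2**40   # decimal TB to binary TiB
    return raw_tb, usable_tb, usable_tib


raw_tb, usable_tb, usable_tib = sssm_capacity()
print(f"raw: {raw_tb} TB, usable after RAID-6: {usable_tb} TB (~{usable_tib:.0f} TiB)")
```

Under these assumptions the estimate comes out at 320 TB, or roughly 291 TiB, which is in the same range as the 292 TB quoted for the BeeGFS file system. The remaining difference may come from rounding or from layout details that are not described here.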

Here are the specifications of the main hardware components in more detail:


The AFSM is based on PCIe3 NVMe SSD storage devices. It is composed of 6 volume data server systems and 2 metadata servers interconnected with a 100 Gb/s EDR InfiniBand fabric. The AFSM is integrated into the DEEP-EST prototype EDR fabric topology of the CM and ESB EDR partition. The BeeGFS global parallel file system is used to make 1.3 PB of data storage capacity available.

Here are the specifications of the main hardware components in more detail:


Different types of interconnects are currently in use, along with the Gigabit Ethernet connectivity that is available on all nodes (used for the administration and service networks). The following sketch gives a rough overview. Network details are of particular interest for storage access. Please also refer to the description of the filesystems.

Note: the network is going to be converted to an "all IB EDR" setup soon.
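To get a feeling for what the different link types mean for storage access, the nominal rates mentioned on this page can be converted into byte rates and ideal transfer times. This is a back-of-the-envelope sketch only: it uses nominal link rates, ignores protocol and file system overhead, and the 100 GB data set size is a hypothetical value chosen for illustration.

```python
# Nominal link rates taken from the text, in gigabits per second.
# Real throughput is lower because of encoding and protocol overhead.
LINK_RATES_GBIT = {
    "EDR InfiniBand": 100,
    "Fibre Channel": 16,
    "Gigabit Ethernet": 1,
}


def ideal_transfer_seconds(size_gb, rate_gbit):
    """Seconds needed to move size_gb gigabytes at rate_gbit Gb/s, ignoring all overhead."""
    return size_gb * 8 / rate_gbit


DATASET_GB = 100  # hypothetical data set size, for illustration only

for name, rate in LINK_RATES_GBIT.items():
    seconds = ideal_transfer_seconds(DATASET_GB, rate)
    print(f"{name:>16}: {rate / 8:6.3f} GB/s, {DATASET_GB} GB in {seconds:7.1f} s")
```

The numbers are upper bounds per link. They are meant to show the order-of-magnitude gap between the fabric used for data and the Gigabit Ethernet used for administration, not to predict measured I/O performance.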

This is a sketch of the available hardware, including a short description of the hardware of interest to system users (the nodes you can use for running your jobs and for testing).

This rack hosts the master nodes, file servers and the storage as well as network components for the Gigabit Ethernet administration and service networks. Users can access the login node via deep@fz-juelich.de (implemented as a virtual machine running on the master nodes). The rack is air-cooled.

This rack contains the hardware of the DEEP-EST Cluster Module, including compute nodes, a management node for this module, network components and a liquid cooling unit.

This rack hosts the compute nodes of the Data Analytics Module of the DEEP-EST prototype, a management node for this module, network components and four BXI test nodes plus a switch. The rack is air-cooled.

Along with the prototype systems, several test nodes and so-called software development vehicles (SDVs) have been installed in the scope of the DEEP(-ER,EST) projects. These are located in the SDV rack (07). The KNL and ml-GPU nodes can be accessed by users via SLURM. Access to the remaining SDV nodes can be granted on request:

protodam[01-04]

deeper-sdv[01-10]

knl[01,04-06]

ml-gpu[01-03]
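The node names above use the compact bracket notation that SLURM also uses for host lists. As an illustration of how such an expression maps to individual node names, here is a minimal Python sketch. The function name `expand_hostlist` is hypothetical, and the code handles only a single bracket group with comma-separated numbers and ranges; it is not a replacement for SLURM's own parsing.

```python
import re


def expand_hostlist(expr):
    """Expand a simple bracketed node expression such as 'knl[01,04-06]'.

    Only one bracket group per expression is handled; this is a sketch,
    not a full implementation of SLURM's hostlist syntax.
    """
    match = re.fullmatch(r"([^\[\]]+)\[([^\[\]]+)\]", expr)
    if match is None:
        return [expr]              # plain host name without a range
    prefix, body = match.groups()
    hosts = []
    for part in body.split(","):
        first, _, last = part.partition("-")
        last = last or first       # single number, e.g. '01'
        width = len(first)         # keep zero padding, e.g. '01'
        for number in range(int(first), int(last) + 1):
            hosts.append(f"{prefix}{number:0{width}d}")
    return hosts


for expr in ["protodam[01-04]", "deeper-sdv[01-10]", "knl[01,04-06]", "ml-gpu[01-03]"]:
    print(expr, "->", " ".join(expand_hostlist(expr)))
```

On a system with SLURM installed, `scontrol show hostnames` performs the same expansion for a given host list expression and should be preferred over a hand-written parser.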